Ben's MPC Cheatsheet

wip, last updated August 19 2022.

|

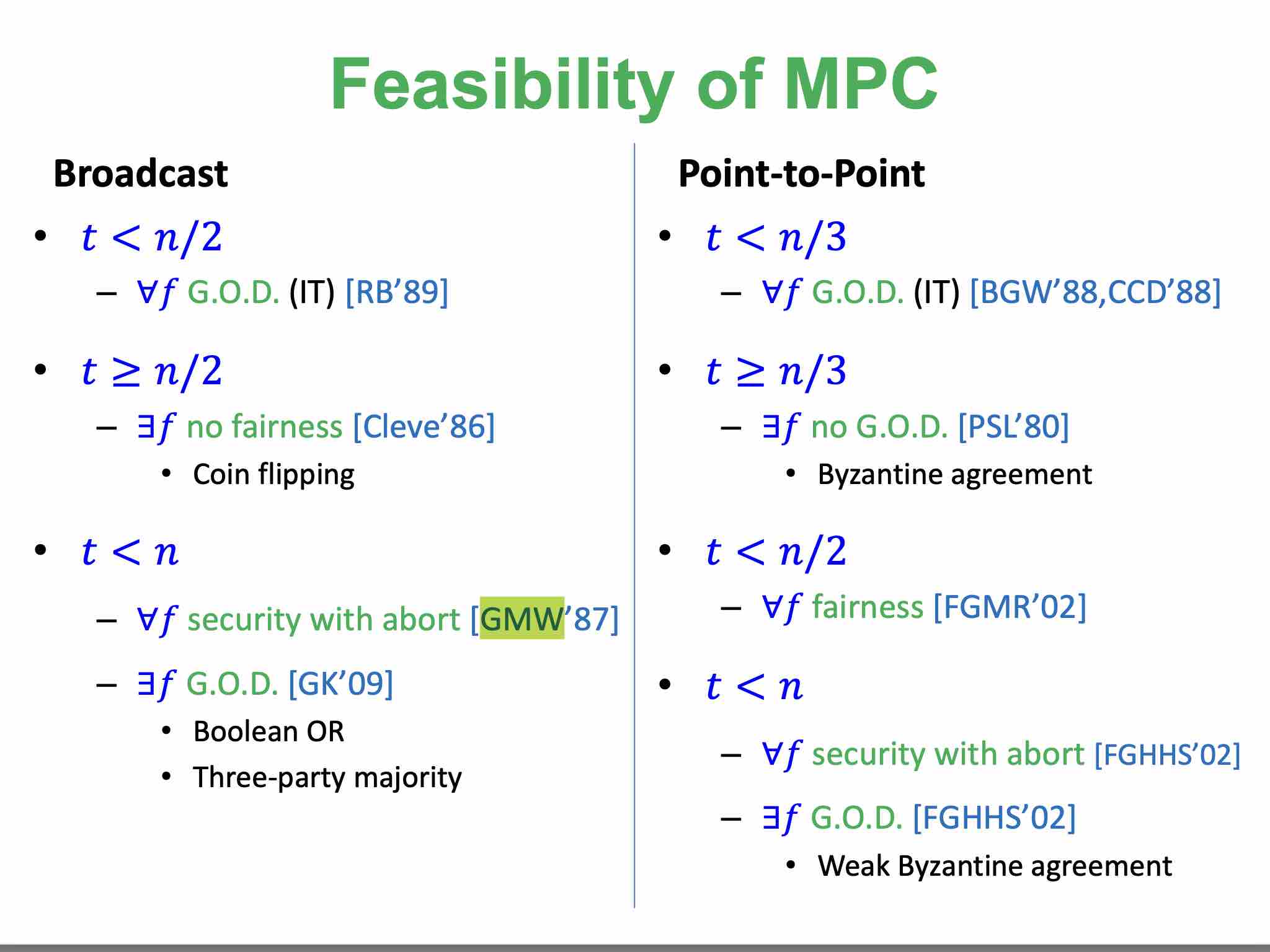

plain model t < n Personal thought on fault tolerance vs setup. I personally consider the fault tolerance parameter to be a setup assumption, which is why it is in the header. It should be equivalent to considering computation in some hybrid model. Arguably network synchrony should also be captured by a setup assumption or functionality hybrid model, and placed in the header. In my opinion, the security model itself should not deal with 'number of parties' and 'number of corrupted parties'—or really, the notion of parties in general; i.e. I think this is a limitation of the way we currently think about MPC. |

plain model t < n/2 On power balancing. Note that having a CRS, or a PKI, causes a loss in deniability: once a party signs something with their private key, and only they have access to that private key, then they cannot deny that they signed that message. This is in contrast to a world where a simulator also has access to that private key (or where there is no a priori fixed set of private keys). Note that \cite{CDPW07} (GUC) gives simulation with deniability. Note that in the GUC model, the simulator cannot "control" the honest global functionality. In fact, if the global functionality only provides public information, ZK is impossible. However, there are global functionalities that keep private state that make MPC possible. Trivially, for instance, we can realize ZK if there is a global ZK functionality. But that is a stupid assumption, and not very interesting. A weaker global functionality is a key registration functionality, that assigns parties public and private keys, and only allows corrupt parties to retrieve their private keys. We make simulation of a party possible only if the private key is known: i.e. targeted deviation from the honest protocol is only possible with the secret key. This gives deniability: I can claim that I got corrupted, and that someone who knows my secret key simulated me. Importantly, we make it impossible for the adversary to simulate honest parties by witholding the secret key of honest parties from the attacker. I think there is something weird; deniability contradicts non-simulatability directly, morally speaking. I must claim I am corrupted for deniability. But perhaps this is ok, when I want to absolve my actions in protocol A in the context of protocol B, where my corruption status in protocol B is independent of my corruption status in protocol A. But usually it's not independent... Again, all this is in \cite{CDPW07}." To me, deniability is a synonym for simulatability, and perhaps even a better interpretation of security. That is, security occurs when any behavior that supplements the "wanted/ideal behavior" can be "denied"; in other words, it could have done by "someone else" - anyone else, without secret input, or access to some special resources. AKA "it wasn't me!!". The key impossibility result is that if the adversary can simulate honest parties without corrupting them, then zero knowledge is impossible. That is, suppose we can simulate $\Pi(x_1)$ using $\Ftaker(x_1) \lr \Sim_1$ where $\Sim_1$ does not know $x_1$. Likewise $\Pi(x_2) \approx \Ftaker(x_2) \lr \Sim_2$. Then $\Pi(x_1) \lr \Pi(x_2) \approx \Ftaker(x_1) \lr \Sim_1 \lr \Sim_2 \lr \Ftaker(x_2)$, and by virtue of $\Ftaker$ being a "taker", then $\Sim_1 \lr \Sim_2 \lr \Ftaker(x_2)$ must extract $x_2$ and send it to $\Ftaker(x_1)$. For a functionality like zero knowledge, this is a contradiction, since the environment can run $\Sim_1$ to extract $x_2$ from the honest party $\Pi(x_2)$. Thus, for feasibility of MPC there must be a Power Imbalance (\cite{CKL03}), where the environment cannot run (one of) the simulators. So SPS is actually pretty strong and probably not necessary.. Claim: Yes, security should imply extractability. Why is this the right definition? See \cite{CKL03} for a reference. First, mechanically, the simulator maps the adversarial strategy to an input for the ideal functionality. But let's intuit the claim in the framework of deniability, since we want our definition to be morally correct, and the implication to be more than a technicality. Recall that the "simulator" must be able to convince any environment that "it wasn't me". Correctness stipulates that some behavior occurred: for instance, something was proved in Zero Knowledge, and for instance, there must have been some witness (i.e. it is a proof of knowledge, as stipulated by the normal UC ideal functionality). Thus, deniability on top of this correctness requirement must involve being able to generate a witness for the output proof, that is, "I wasn't the one who generated the proof, but someone else did, and moreover they must have done it in a valid way, with a proper witness, if the verifier runs the correct code." (if the verifier doesn't run the correct code, do we make a stipulation???) The "validity constraint" is enforced by ???. |

plain model t < n/3 |

CRS model t < n |

|

|---|---|---|---|---|

|

synchronous n.u. computational ℰ The Environment ℰ. Here, I use ℰ to denote the environment. We take ℰ to be non-uniform to match [Canetti01], though [HMS03] observes that UC composition holds for uniform ℰ as well, as pointed out by [CKL04]. UC-secure Recall that for UC security [Canetti01], where a protocol P implements functionality F, we show that there exists some 'structured' uniform PPT simulator S, s.t. for every environment ℰ, for every security parameter λ, BinOutℰ(1λ, P ↔ ℰ) ≅ BinOutℰ(1λ, F ↔ S ↔ ℰ). The symbol ≅ denotes statistically indistinguishability. Recall that in an interaction P ↔ ℰ, ℰ picks all n inputs for P, sees all n outputs, and can also alter the behavior of P in some way corresponding to the allowed adversarial behavior (typically bounded by t, and characterized by backdoor communication in UC). BinOutℰ(1λ, P ↔ ℰ) then denotes the output of ℰ—which must be binary—in such an interaction on input 1λ. If the output weren't binary, then the computational power of ℰ doesn't matter. Finally, the simulator S is 'structured' in the sense that it must still allow ℰ to directly pick the inputs and view the outputs of F (but on the other hand, it acts as a 'filter' on backdoor communication). Notes on the Restricted Model of UC (from Section 2 of [Canetti01]). In the restricted model, ℰ is not allowed to invoke session IDs at will; instead, it is restricted to "external IDs" specified in the "communication set" of the protocol ahead of time; that is, each protocol is statically assumed to communicate with a fixed set of parties ahead of time, and ℰ may only choose from this set of parties. This is in contrast to full UC, where ℰ may essentially choose the code and IDs that it is simulating. In the restricted model, capturing UC with global subroutines/setup (see the footnote on EUC) is much easier (e.g. [BCHT20]) as long as we allow input/output access to the global subroutine by the environment (like in EUC); this is because we can make the composition theorem such that we assume the ideal protocol and real protocol have the same set of "external identities" or "calling identities", so proving UC-emulation directly proves that the real protocol can be called in the same context as the ideal protocol. In contrast, in a setting with dynamic identities, the definition of UC is fragile (but the intuition is still easy), as they depend heavily on the notion of subroutine, disallowing multiple instances of some parent protocol from sharing a subroutine. This is a flaw of UC, rectified in part by [BCHT20] which allows ℰ to access the global subroutine and parent protocol through a "management protocol" (reminiscent of our structured simulator), but I see no reason why there should be any trouble at all with the modelling in the first place (i.e. why not just allow a CRS functionality in the first place, and just argue real+CRS emulates ideal+CRS?). Opinion: in this case, the formalization of UC is a barrier to understanding. For instance, an alternative (I think) would be just to quantify over all possible external identities when proving UC-emulation. is anything wrong with this? Why is dynamicism hard? Suppose that the environment is allowed to choose from any set of external IDs, and not some limited static set. (In the static, limited setting, the "set of parties" with whom any individual machine can communicate with is fixed ahead of time.) In particular, we don't want to fix the communication set ahead of time (Why not?). Why is this hard? The main trouble is that "inner protocols" can invoke any identities that they want. Without constraints such as "subroutine respecting", this means that a protocol may be talking to the outside world in a way not captured by the interface between the ideal functionality and the environment. The key point is that we want the ideal interaction to capture a "dynamic" real interaction. When new parties are constantly joining the system, we don't want to have to update the ideal interaction to compensate. It's not really that much more complicated: we just have to define what it means to be "subroutine-respecting", and define what the composition operation means (when the inner protocol can invoke a lot more parties.) |

Commitment schemes

impossible [CF01]

Proof sketch for impossibility of 2-party commitment when either party can be corrupted (i.e. with both binding and hiding) [CF01]: See the definition of the functionality below. First, consider corrupted C (committer) and honest V (verifier). The environment just runs the commitment code. Note that V must get a receipt message from Fcom before the underlying bit is revealed, by definition of the functionality. This is hard to simulate! Intuitively S must send Fcom some commit message (to simulate V getting a commit receipt) before the commitment is even opened, and thus must "extract" the correct bit from the commitment. This seems impossible, so let's formalize the intuition now.

Proof by contradiction. Suppose such an S did exist (for the corrupted C above). Then, consider an honest committer C that commits to a random bit and corrupted verifier V. V now runs S on the transcript from C (using V's view), and simulates Fcom in the view of S, using S to "extract" b from C's messages. V outputs b. Contradiction because V's view is independent of the real b. Why is it possible in the 1-shot world? This is a todo. I'm not sure. . Commitment schemes imply strong ZK, so strong ZK impossible? UC Commitments implies strong UC Zero Knowledge. todo Personal thought on impossibility of commitments and ZK in 2PC setting. I personally suspect the modelling is wrong -- that the definition of ZK should not be allowed -- that even in the plain model everything should be possible, and that ZK is just misformulated. Even self-composition is impossible [Lin04] in some cases. Concurrent self-composition (see [Lin04]) refers to running multiple copies of the same protocol -- and no other protocol. |

|||

|

synchronous arbitrary ℰ UC-secure |

todo | todo | todo | todo |

|

asynchronous n.u. computational ℰ UC-secure |

todo | todo | todo | |

|

asynchronous arbitrary ℰ UC-secure |

todo | todo | todo | todo |

|

synchronous n.u. computational ℰ Here, ℰ again denotes an environment. There is no inherent need that it should be non-uniform (I think?). Note that most treatments of 1-shot security (e.g. see [Lindell17]) do not bother with an environment; we follow [Canetti06] and introduce one to match the mental model of UC. 1-shot-secure Also known as standalone security. TODO 2. forgot about adversary's output. Is this captured in the output of the corrupt party? Another todo: PPT adversary, but statistical indistingusihability? OR unbounded adversary, statistical indistingusiahbility? In [Canetti00], usually A gets more power too. In statistical ZK, usually both V* and S are PPT (see [GO94]). I guess if V is unboudned it could just compute the witness in ZK, but that doesn't generalize. It also seems that Salil Vadhan defines statistical ZK to be unbounded verifier ZK (and it requires black-box simulators). When the V can be unbounded yet Simulated I think it's also called "strong simulatability". Recall that for 1-shot security, where a protocol P implements some functionality F, we usually show that for every PPT attacker A, there exists a 'structured' uniform PPT simulator S s.t. for every environment ℰ, for every security parameter λ, BinOutℰ(1λ, P ↔ A ↔ ℰ) ≅ BinOutℰ(1λ, F ↔ S ↔ ℰ). In an interaction P ↔ A ↔ ℰ (or F ↔ S ↔ ℰ), ℰ picks all n inputs for P and A (or F and S), sees all n outputs, but unlike in UC security, ℰ only chooses the parties inputs and sees the outputs, and has no other interaction with A (or S). In other words, ℰ does not see or influence the backdoor interaction between P and A (or F and S). Thus, a dummy attacker A would not be very interesting in this setting (or rather, disallowed). Note that the traditional definition says that we can simulate the entire "view" of A -- the view being the coins of A, the input, the output, and the messages received by A; note this is not equivalent to simulating another party with whom A interacts (that is more UC-esque). This makes sense when another algorithm M uses A; instead of running A to verify a Zero Knowledge proof, for instance, M can just run the simulator in lieu of A to put itself in the same state, without interacting with the prover on A's behalf. todo: write something about black box S? is A always PPT? is S always PPT? . Also, what happens if S is not PPT? Should we be interested in non-PPT S? (Yes, see SPS, rafael's work...). Black-box? So many notions... can we simplify? |

|

All functionalities, malicious, with guaranteed output delivery Guaranteed output delivery, informally, requires that all parties always obtain outputs, and ℰ cannot abort the execution [CL17]. It implies fairness. , in broadcast channel hybrid model [GMW87]. | todo | todo |

|

synchronous arbitrary ℰ 1-shot-secure |

todo |

All functionalities, semihonest [BGW88]. All functionalities, malicious, broadcast channel [RB89]. perfect security? |

All functionalities, malicious [BGW88, CCD88]. perfect security? | todo |

|

synchronous n.u. computational ℰ 1-shot-secure super-polynomial SSuper-polynomial Simulators. todo add definition. Note that SPS security does not preserve composition. It preserves concurrent self-composition (where the protocol that is proven secure is run concurrently with other instances of the same protocol, and no instances of any other protocol. Moreover, parties play the same roles, and it's always the same party set), but on the other hand, general composition does not hold; see [CLP10] for a reference. If there is only one instance of the protocol (with a superpolynomial simulator) to "replace", then composition holds, since up to that point there is no superpolynomial machine in the system that the environment must capture. If there are multiple instances, and an environment, then composition no longer holds. First, it seems that the dummy lemma is true w.r.t. SPS (verify). Now, when proving a composition lemma with multiple instances of the real protocol, we will have to swap them one at a time for the ideal protocol via hybrid argument. But suppose we had already previously replaced some real protocol with an ideal one in the view of the environment: in particular, the environment is now interacting with a superpolynomial-simulator in this experiment. In order to swap another real functionality for ideal, to apply UC-emulation the new environment must simulate every other party in the experiment, including this SPS that is present because of a previous swap. Why does concurrent self-composition hold? Here, note that the party set and party roles do not change; the same parties are executing the same protocol many times concurrently (for details, see [Lin03]). I don't know... figure this out. |

|

todo | todo | todo |

|

synchronous n.u. computational ℰ UC-secure super-polynomial S Consider the framework of Externalized Universal Composability or EUC [CDPW07]. This is a part of a line of work that tries to formalize the notion of composable security in the context of some global setup (e.g. PKI or CRS), which is problematic since the global setup affects composition (e.g. UC-secure ZK using CRS may not be deniable [Pass03]) if the attacker uses the same setup. To deal with this, EUC models the setup as a global entity, to which the environment has access, that lives in both the real and the ideal worlds -- that is, it interacts with the ideal functionality also (Thus, now the simulator cannot choose or rewind the global setup). Commitment in this model remains impossible if the setup gives only public information; but allowing private keys in the global setup, or some interactive global setup, any functionality can be realized. Note that in UC, protocols cannot share state with one-another (they are invoked in some tree-like structure, and cannot invoke protocols "outside"; see [CDPW07] for details. The name for this is subroutine respecting, and it is really incredibly ugly, and a consequence of not designing ideal functionalities that capture the leakage of global shared state). In other words, the environment only gets state from the "challenge protocol" through the adversary. "H-EUC" explicity gives the environment access to shared state (like a CRS) by simply modeling it as an additional "shared functionality" H (for instance, H is the CRS). H typically talks to the ideal functionality/protocol, the attacker/simulator, and the environment, all with direct input/output relationships. So it looks like, there exists S, s.t. for every ℰ, for every λ, BinOutℰ(1λ, P+H ↔ ℰ) ≅ BinOutℰ(1λ, F+H ↔ S ↔ ℰ ↔ H), but importantly, S in the second experiment is structured: it must allow ℰ to talk and interact directly with H (it being real communication, that S isn't in a position to filter. Else S could choose the CRS). This is the distinction compared to regular UC. Now, to deal with SPS UC-security or angel-based security (see [PS04, CLP10]), we put the superpolynomial power all into an angel "H", but design H s.t. it does not talk with the protocol/ideal functionality, but still talks with the environment, and A/S. We are able to choose H, for instance H could be a helper that opens a commitment without knowing the secret ahead of time, in superpolynomial time, but only if invoked on commitments by corrupt parties (a la [CLP10]). The point is that H is designed to make it easy to break the security of corrupted parties (thus easy to simulate), but hard to break the security of honest parties (so the protocol remains secure. If the environment gets more power in an unlimited way, impossibility of ZK and commitment would still hold). Unlike normal SPS security, here the attacker/Environment also have access to H (as opposed to just the simulator having access). Impossibilities are circumvented since H knows which parties are corrupt. Also note that H will never show up in the protocol spec; it is a technique for security analysis only. Why is this notion meaningful? Mostly, angel-based security implies SPS. In SPS, if an attack can be run, we can break some sub-exponential assumption (or otherwise the ideal protocol in super-polynomial time). With an angel, the starting attacker has the option of being superpolynomial as well, but H cannot empower it so much as to break the protocol (otherwise the protocol might as well be information theoretic secure). Note, composability holds fixing an angel H. So H basically formalizes the "S/Env power imbalance" (e.g. to simulate A's commitments, but not let Env break honest ones) required for security, in a composable way. |

todo | todo | todo | todo |

|

asynchronous arbitrary ℰ 1-shot-secure |

todo | todo | All functionalities, malicious [BKR94]. Note perfect security is possible for t < n/4 [BCG93]. | todo |

Additional notes from Ben:

failstop, see FHM99

Every ITM -- or security game, if you will -- can be characterized as a distributed algorithm, and vice versa. Thus it is easy to build distributed algorithms, by just showing an ITM. I.e. given functionality F (i.e. consensus functionality), does there exist S (of some structure), satisfying our security/realization property? Does such an S imply a protocol P? What structure does S need to have? S needs to give ℰ some surface area, something to attack, change... S needs some 'robustness'...

Ideal Functionalities

note, we ignore complexity of UC, omitting public delayed outputs (todo maybe understand this better). todo put footnotes in own section. I also omit session ids (I think they should really go into the security model, not def of functionality). What about party ids (if static...)| todo |

|---|

FZKR —Zero Knowledge [CF01]Parties pid = 1 ... n. Backdoor S.

***Another random note [GO94], since I don tknow where to put it. Note that a black-box simulator for zero knowledge (using the corrupted verifier as a black-box) implies a simulator for auxiliary input zero knowledge (i.e. if the corrupted verifier gets an auxiliary input, we can still simulate its view, where the "view of the verifier" comprises the input, input coins, aux input if applicable, and replies from the prover.) |

FZKR,R' —Zero Knowledge, Relaxation [CKS11]doesn't send m to S immediately, and instead only sends some leakage of m. Also allows another relation R' saying that corrupt provers are allowed to prove using witness in larger set R' instead (capturing soundness gap---i.e. we can only prevent most bad proofs, but not those in R'. Soundness is like binding (or extractability).) |

FNIZKR —One Message Zero Knowledge [GOS12]not deniable; it is transferable. |

FCOM —Commitments [CF01] hmmParties pid = 1 ... n. Backdoor S. sid distinguishes multiple copies of Fcom.

|

FKE —Key Exchange (2 party) [todo] hmm2 parties. No inputs? Both parties get the same random key? |

FOT —Oblivious Transfer (1 out of 2) [todo] hmm2 parties. $f((m0, m1), b) = (\bot, mb)$

|

Additional Thoughts

- Consider a generalized notion, where we say a protocol P implements a 'specification' F, iff . Observe that this generalizes the notion of backdoor interaction from UC, and incorporates it as part of the specification F. Both P and F are ITMs; any ITM can also be interpreted as a specification.

- Is there a separation between UC-security and 1-shot security? (note that commitment schemes are not one; under our definition of 1-shot, they are still impossible? No, this statement is wrong, assuming they allow attacker to be corrupted?)

- OT seems equivalent to honest majority, to some degree. i.e. honest majority MPC is in minicrypt (is this statement true for cosmic?). (necessary for 2 party, see Kil88)

On Exchange

- See [CC00] for citations on fairness and fair exchange (Silvio has a paper too). Mentions Cleve.

On CRS and Simulator Power

- Why does CRS enable 2 party UC secure computation, and why does it enable NIZKs? Because now the Simulator can pick a fake CRS that is ``consistent'' with the distribution it wants to simulate, s.t. the fake CRS does not have to be generated honestly. It can be picked after the fact, for instance.

- Why is the simulator allowed to output the CRS? (Note that the simulator takes just the instance/input x as input; it outputs the CRS and the protocol view.) Indeed this is a limitation; the proof of security touches the CRS; the CRS cannot be reused; we lose deniability for instance [Pass03]. Intuitively, this is only zero knowledge, if the verifier never touched the CRS previously.

- A CRS may be too powerful; alternatives exist. In [BCNP04], they show that a ``key registration'' service is sufficient for 2PC to replicate all of the feasibility using a CRS (namely, UC 2pc.) The service picks secret keys for honest parties, and allows corrupt parties to choose their own secret keys. This allows for self-simulatable simulators (that don't need extra power over some external CRS) for verifiers that pick their own secrety keys. Generally the Prover proves they either know a witness, OR they know the verifier's secret keys, using WI. Of course the verifier does not know which is actually the case. Doing this WI proof requires the verifier's public key be correctly computed from the secret key (hence the key registration service). Soundness (prover can't cheat) follows since the prover shouldn't know the verifier secret key. But here, the simulator knows the secret key of the verifier, as it is the verifier doing the simulation.

- Intuiting the above. So, it seems that the simulator does not need to be much more powerful than the corrupt party. In fact, extending [BCNP04], [DPW05, a manuscript] shows that as long as the attacker is structured in a certain way, e.g. revealing some specific ``leakage'' of its secret information (that is, the public key corresponding to this secret), then a protocol can harness this weakness, and become straight-line simulateable in the view of this attacker. It's unclear if the secret needs to be sampled according to some distribution: I think not.

- What about broadcast? Most MPC protocols assume broadcast channels. Perhaps unintuitively, under asynchrony, in [GL05], they show that for UC two party computation, we don't need broadcast. This is surprising, since consensus requires/is broadcast, and MPC usually implies consensus. But I guess without fairness, consensus is pretty useless, and trivial, so there is no contradiction here. In [GL05], parties just wait to receive messages from every other party. Certainly the attacker can stall the network; but they can stall the network in the ideal world too and prevent an honest party from receiving any output. On Liveness. We might be interested in situations where some parties `crash' but the others still get messages; we want to make progress in such a scenario. In the real world of [GL05] there would be no progress (but it would be a ``fair exeuction''); to simulate this in the ideal world means never delivering a message to an honest party, which is no longer a ``fair execution''. A better notion of simulatability/security would somehow capture these liveness properties that we want.

- A two party security model that captures liveness. Allow one of the adversaries to act honestly and do fair message delivery?

- So my question: Is there a `minimal' functionality F, s.t. any extra functionality that we can use to achieve full simulatability, implements F? In particular, we want to prove that such an F is necessary, and cannot be ``stripped down'' further.

On Abstract/Constructive Cryptography, and Compiling Resources

- Question: What are the minimal ideal resources that we can compile into any resource? That is, what is a complete resource?

- The idea is that message delivery, etc, we put into the protocols that the two parties on each side of the channel run -- no more mucking about modeling constraints with output delivery, or the ideal functionality.

- Note that this seems remarkably similar to Constructive Cryptography [MR11, Maurer11]. Also see Collusion-Preserving Computation [AKMZ12], Universally Composable Incoercibility [UM10], and more, which are all instances where universal composability fails to guarantee the strongest possible notion of security. For Abstract/Constructive Cryptography, see [PR22] for a more readable synthesis (also for some survey of relevant quantum phenomena). Note that Constructive Cryptography (appears to be) is still player specific -- and each interface must be enumerated, and are the quanta of the security framework.

- In particular, in Constructive/Abstract cryptography, the distinguisher sits at all the interfaces (e.g. all of Alice, Bob, and Eve), sometimes mediated by a simulator (e.g. for just Eve). In that sense, it is like the environment: it can pick the input, and see the outputs. Statements are often made of the form, for all "subsets of interfaces", if those subsets are mediated, and the complements (have the option to) run some protocol, we (can) still have indistinguishability.

- Sometimes Constructive Cryptography [Maurer11] requires two statements for the security of some primitive. For instance, take some arbitrary protocol with both soundness and completeness (e.g. QKD, in [PR22]). For soundness, any outcome generated by an adversary must be good, but an adversary might just abort. But for completeness, it says if there is no adversary, then the protocol should not abort. Constructive Cryptography (at least in [PR22]) models this by using two different statements, on two diff resources, one that exists when the advesrary exists, and one that exists when the adversary is not there. They realize two diff functionalities: one that allows abort, and one that does not. So the same protocol realizes two diff functionalities with 2 diff resources. Also see Section 5.1 in [Maurer11] for another explanation.

- Computational Constructive Cryptography. See [Maurer11]. Essentially, by picking a specific instantiation of the distinguisher class and resource class (that is feasible [MR11], e.g. nuPPT ITMs), a specific instantiation of the protocol implementation/"converter" class (that is efficient [MR11]), and distance metric (for security) (e.g. computational indistinguishability), then we can reason about concrete (that is, compared to abstract) security. That is, the theorem statements will hardcode the domains from which we choose each entity.

- On Abstract Cryptography. Abstract cryptography is then just a series of definitions for general terms, like composability, that apply to any choice of computational model, etc, namely at a higher level of abstraction. Is this useful? My personal opinion is: not a ton, since we can't write a lot of theorem statements in the abstract setting; we must realize them anyways. Let me try to summarize (the nuances of) Abstract Cryptography:

- Specifications: Instead of just a resource R "realizing" an abstract resource S, we have a set of resources curlyR "realizing" an (ideal) set of resources curlyS. The set of allowed converters for each element of a set of resources curlyR might be different, perhaps even disjoint. A guaranteed choice for a set of resources curlyR is just a converter that can be applied to any resource R in curlyR. A Specification is then a set of resources curlyR, along with a set of guaranteed choices for curlyR.

- On Real Specifications Realizing Ideal Specifications: A "real" specification curlyR realizes an "ideal" specification curlyS under a mapping M if (1) any guaranteed choice for curlyR gR can be mapped to a guaranteed choice fo curlyS using M(gR), where the mapping is done locally for each party, and moreover, (2) for any R in curlyR, there exists S in curlyS, s.t. for every gR, applying gR to R is indistinguishable from applying M(gR) to S. In other words, no matter what concrete implementations we are dealing with for curlyS and curlyR, we can always indistinguishably map a choice for R to a choice for S, where both choices are allowed because they are "guaranteed". Note that "real" and "ideal" have no meaning here, and I added the terms just for intuition. ([MR11] additionally stipulates that every guaranteed choice for curlyS gS must also be mapped to some guaranteed M^{-1}(gS) for curlyR, but there is no requirement of indistinguishability here when applying gS and M^{-1}(gS) to concrete implementations of the specifications. But it must be that for gS, there exists a choice r that when applied to R, it looks like gS applied to S, where r can depend on R. This seems extraneous to me!)

- Filtered Specifications: let c be some converter (formally, defined interface-wise, one per interface). Let R be some resource. Denote R_c to be the set of all resources comprising R attached to some (any) converter suffixed by c (note that a resource + a converter is still a resource). That is, R_c is the set of resources [dist] <-> X <-> c <-> R, enumerating over X. So c is essentially a "constrained R", and we usually choose c s.t. we know honest parties must be able to interface with R through c.

- The Main Theorem: Abstract Cryptography really only proves one theorem (Theorem 2 in Section 7.4 in [MR11]). The statement is the following. Let c, c', Protocol, Sim be converters. Let R and S be arbitrary resources, and constrain ourselves to what we know is possible for honest parties, namely R_c and S_c'. Suppose that for any R' in R_c, there exists an S' in S_c' such that the following is true: for any set of corrupted interfaces, when the honest interfaces run [distinguisher] <-> Protocol <-> c <-> R', this is indistinguishable from when the honest interfaces run [distinguisher] <-> c' <-> S' and the adversary runs [distinguisher] <-> Sim <-> S', for all distinguishers (which can run all converters). Note that this S' allows the attachment of Sim (which is not guaranteed by S' being in S_c'; in other words, the distinguisher is not restricted by c'). Then, the theorem states: R_c must realize the ideal specification S_c'. Note that for the latter to be possible, because we map converters (for honest parties) for the real world to converters suffixed by c', all converters sufficed by c' must be guaranteed/allowed in the ideal world for honest parties. The proof is simple. If the converter in the real world follows the protocol [dist] <-> Protocol <-> c <-> R, then map it to [dist] <-> c' <-> S. (Allowed for honest parties). If it follows some arbitrary behavior X (using c) like [dist] <-> X <-> c <-> R, then map it to [dist] <-> X <-> c <-> Sim <-> S (allowed by the distinguisher). All of these choices are guaranteed by the strong (and useless, I think) definition of filtering. Restating the Theorem Statement in accessible terms: Ignore filters c and c' for now. Suppose that R+Protocol ~= S. BUT, say the distinguisher also has direct access to R for an arbitrary subset of interfaces, without running the Protocol. Say that we can still replace R with S for these interfaces but we need to attach a Sim in the ideal world. THEN, for any action for real R, we can still map it to an action using Sim for ideal S, or using nothing at all if the original action ran the Protocol. I think it's a tautology. I guess the point is that, to prove security, it suffices to enumerate over all "corruption sets", and show local simulators for each party in the corruption set. Another takeaway is that Simulators are just a proof technique, for dealing with unknown behavior, and prespecified honest behavior. But it may not necessarily be true that honest behavior is specified so rigidly, for instance.

- Impossibility of UC commitments: Consider a resource Com that takes in a value v on the left side, outputs "commit" on the right side, then takes a message "open" on the left side, and outputs v on the right side. Note that for all converters c, COMcCOM != COM, because the second COM will not get the message from the first COM, and thus outputs the wrong message. But say that C realizes COM; namely there is a protocol p1, p2 s.t. p1Cp2 = COM, and that in the case of corruption, there exists s1 and s2 s.t. p1C = COMs2, and Cp2 = s1COM. (This is Theorem 2, but backwards. The proof of Theorem 2 backwards seems pretty wrong and is opinionated about protocols, unlike before. It seems like the antithesis of the point of the paper.) Then p1p2 = COM as C is just a communication channel. Now, COM = p1p2 = p1CCp2 = COMs2s1COM which is a contradiction.

- Weirdnesses of UC: it is incredibly odd to me that ideal functionalities can take input from both the attacker (via corruption via backdoor), and environmental input to a dummy party. Moreover, this is meaningful even if F does not change its behavior based on a corruption. In particular, the impossibility of UC commitment proof is impossibly confusing, because it is not the environment feeding input into Fcom -- it is the simulator.

- Personal opinion from Ben: The Theorem above is more of a definition that is not very useful. It tells us why the simulator paradigm might be interesting in the context of assuming "honest parties" and "honest protocol execution" and local simulators -- i.e., it has abstract relevance as well -- but not why the abstract paradigm is interesting, for Theorem 2 backwards does not seem to be true without assuming on/off binary honest/malicious restrictions on party behavior. For instance, the abstract paradigm could just admit a monolithic simulator; I don't see why not, it is just a definitional choice (e.g. the rigidity of converters, why not have converters that mix two inputs and output two outputs?) that is also completely unmotivated in this paper. So what if the distinguisher can explain behavior by self-collusion? Nor does it capture the game theoretic motivation of all rational players. This whole theory is a notational, buggy, underspecified (in some cases) mess. Some good ideas (e.g. local simulators), but abstraction in general seems pretty useless (and the good ideas were generally covered in other work, such as collusion-resistance).

- For Arbitrary Corruptions: meh. CC is opinionated and unopinionated at the same time. It's a lot like working with UC. See [JM20] and [HLM21] (and new, different summaries of CC. It's quite hard to pin down what this CC framework is exactly. It's like an octopus. Can squeeze through any hole.)

On Network Models and UC

- On Synchrony: Universal Composability in and of itself does not capture any liveness guarantee; any liveness guarantee must be either appended to the model, or written into the ideal functionality. [KMTZ13] argues (as I believe) that the best way to do this is to indeed bake it into the ideal functionalities; else in vanilla UC without special network interfaces for the attacker, honest parties essentially can invoke other honest parties as subroutines (is this true?). (I also think that this subroutine heirarchy is somewhat unnecessary -- it would be better to trace messages as queries that generate other queries, and eventually a reply).

- On Corruption Models: Note that I personally think of corrupt parties as "ideal functionalities" that allow for total corruption. And network adversaries are just "ideal functionalities" that allow for partial corruption (to enforce delta-bounded delay, or fair executions, that sort of thing.) For example, we can interpret [CV12] as highlighting that the network adversary is different than the adversaries corrupting local parties, and so we must stop collusion between the network adversary and party corruptions in their setting (as opposed to [AKMZ12], which only stop collusion between parties, and allow collusion with the network adversary).

Concurrent Zero Knowledge with Timing

- A Summary: [Goldreich02] [DNS04]

Proofs of Knowledge?

- Why proofs of knowledge? Sometimes, deciding a language (for instance in TFNP) is easy -- there is always a witness in the corresponding relation. Then, a simple zero-knowledge proof (or zero-knowledge argument) would be vacuous. But, perhaps, this witness is hard to find, or hard to compute; then, a zero-knowledge proof of knowledge, defined via an extractor with some knowledge-error, is meaningful, since the prover convinces the verifier that it actually knows some witness.

Cooperation Theory

- Q: How do we measure cooperation? Todo

- Q: How do we measure cost of cooperation? Todo

Todos

- Read setup in ZKSnarks https://medium.com/qed-it/diving-into-the-snarks-setup-phase-b7660242a0d7 out of curiosity

- Todo (Bell's THeorem) watch https://www.youtube.com/watch?v=1nh-pjxnM4I out of curiosity.

- Why doesn't UC capture liveness (partial synchrony?)

- Todo Read https://staff.fnwi.uva.nl/u.endriss/pubs/files/ChevaleyreEtAlSOFSEM2007.pdf Computational Social Choice survey

- Read https://arxiv.org/pdf/2208.07006.pdf Open-Source game theory (for fun)